- The storage appliance is on the 10.1.6.0/24 subnet.

- The new vmkernel interface is on the 10.2.6.0/24 subnet. In my lab, 10.2.0.0/16 is meant to represent a remote site.

- All VLANs and subnets are defined on a single Cisco router/switch, which means that all routes are directly connected on that device.

Configuration of the vmkernel interfaces. This does not change during the fix. iSCSI is defined on vmk1.

[root@VAPELHhost01:/] esxcfg-vmknic --list

| Interface | Port Group/DVPort/Opaque Network | IP Family | IP Address | Netmask | Broadcast | MAC Address | MTU | TSO MSS | Enabled | Type | NetStack |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| vmk0 | Management Network | IPv4 | 10.2.4.10 | 255.255.255.0 | 10.2.4.255 | 2c:59:e5:39:fb:14 | 1500 | 65535 | true | STATIC | defaultTcpipStack |
| vmk1 | 0206-iSCSI | IPv4 | 10.2.6.10 | 255.255.255.0 | 10.2.6.255 | 00:50:56:62:37:5d | 1500 | 65535 | true | STATIC | defaultTcpipStack |

[root@VAPELHhost01:/]

Routing table before fix:

[root@VAPELHhost01:~] esxcfg-route --list

VMkernel Routes:

| Network | Netmask | Gateway | Interface |
| --- | --- | --- | --- |
| 10.2.4.0 | 255.255.255.0 | Local Subnet | vmk0 |
| 10.2.6.0 | 255.255.255.0 | Local Subnet | vmk1 |
| default | 0.0.0.0 | 10.2.4.1 | vmk0 |

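My first attempt to fix the problem failed because I used the IP address of the iSCSI vmkernel interface itself (10.2.6.10) as the gateway. The gateway has to be a router on the local subnet, not the host's own address.

Add (incorrect) static route using the vmkernel interface address as the gateway.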

[root@VAPELHhost01:~] esxcfg-route --add 10.1.6.0/24 10.2.6.10

Adding static route 10.1.6.0/24 to VMkernel

New (incorrect) routing table. Interface vmk1 has a new route.

[root@VAPELHhost01:~] esxcfg-route --list

VMkernel Routes:

| Network | Netmask | Gateway | Interface |
| --- | --- | --- | --- |
| 10.1.6.0 | 255.255.255.0 | 10.2.6.10 | vmk1 |
| 10.2.4.0 | 255.255.255.0 | Local Subnet | vmk0 |
| 10.2.6.0 | 255.255.255.0 | Local Subnet | vmk1 |
| default | 0.0.0.0 | 10.2.4.1 | vmk0 |

The new (incorrect) route fails: 100% packet loss, with or without specifying the interface.

[root@VAPELHhost01:~] esxcli network diag ping --host=TOPOLHNAS01is.local --interface=vmk1

Summary:

Duplicated: 0

Host Addr: TOPOLHNAS01is.local

Packet Lost: 100

Recieved: 0

Roundtrip Avg MS: -2147483648

Roundtrip Max MS: 0

Roundtrip Min MS: 999999000

Transmitted: 3

Trace:

[root@VAPELHhost01:~] esxcli network diag ping --host=TOPOLHNAS01is.local

Summary:

Duplicated: 0

Host Addr: TOPOLHNAS01is.local

Packet Lost: 100

Recieved: 0

Roundtrip Avg MS: -2147483648

Roundtrip Max MS: 0

Roundtrip Min MS: 999999000

Transmitted: 3

Trace:

[root@VAPELHhost01:~]
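The reason the first route cannot work can be sketched in a few lines of Python. This is only an illustration of the rule "a gateway must be another device on a directly connected subnet", not how the VMkernel validates routes; the function name `check_gateway` and the `VMK_INTERFACES` dictionary are my own, with the addresses taken from the `esxcfg-vmknic --list` output.

```python
import ipaddress

# VMkernel interfaces as reported by `esxcfg-vmknic --list`.
VMK_INTERFACES = {
    "vmk0": ipaddress.ip_interface("10.2.4.10/255.255.255.0"),
    "vmk1": ipaddress.ip_interface("10.2.6.10/255.255.255.0"),
}


def check_gateway(gateway: str) -> str:
    """Return a short verdict on whether `gateway` could be a usable next hop.

    Hypothetical helper: it only checks addressing, it cannot tell
    whether a router is actually listening on that address.
    """
    gw = ipaddress.ip_address(gateway)
    for name, iface in VMK_INTERFACES.items():
        if gw == iface.ip:
            return f"bad: {gw} is {name}'s own address, not a router"
        if gw in iface.network:
            return f"ok: {gw} is on-link via {name}"
    return f"bad: {gw} is not on any directly connected subnet"


print(check_gateway("10.2.6.10"))  # the gateway used in the first attempt
print(check_gateway("10.2.6.1"))   # the router address on the iSCSI subnet
```

The first call flags 10.2.6.10 as the host's own address, while 10.2.6.1 passes because it is a different address on vmk1's subnet.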

I realized my mistake and tried again, this time using the IP address of the default gateway for the local iSCSI subnet (10.2.6.1).

Remove the incorrect static route.

[root@VAPELHhost01:~] esxcfg-route --del 10.1.6.0/24 10.2.6.10

Deleting static route 10.1.6.0/24 from VMkernel

Add the correct route, using the default gateway of the local iSCSI subnet.

[root@VAPELHhost01:~] esxcfg-route --add 10.1.6.0/24 10.2.6.1

Adding static route 10.1.6.0/24 to VMkernel

Test new (corrected) static route. Success.

[root@VAPELHhost01:~] esxcli network diag ping --host=TOPOLHNAS01is.local --interface=vmk1

Summary:

Duplicated: 0

Host Addr: TOPOLHNAS01is.local

Packet Lost: 0

Recieved: 3

Roundtrip Avg MS: 524

Roundtrip Max MS: 1036

Roundtrip Min MS: 268

Transmitted: 3

Trace:

Detail:

Dup: false

Host: 10.1.6.10

ICMPSeq: 0

Received Bytes: 64

Roundtrip Time MS: 1037

TTL: 63

Detail:

Dup: false

Host: 10.1.6.10

ICMPSeq: 1

Received Bytes: 64

Roundtrip Time MS: 268

TTL: 63

Detail:

Dup: false

Host: 10.1.6.10

ICMPSeq: 2

Received Bytes: 64

Roundtrip Time MS: 268

TTL: 63

[root@VAPELHhost01:/] esxcli network diag ping --host=TOPOLHNAS01is.local

Summary:

Duplicated: 0

Host Addr: TOPOLHNAS01is.local

Packet Lost: 0

Recieved: 3

Roundtrip Avg MS: 2606

Roundtrip Max MS: 7304

Roundtrip Min MS: 241

Transmitted: 3

Trace:

Detail:

Dup: false

Host: 10.1.6.10

ICMPSeq: 0

Received Bytes: 64

Roundtrip Time MS: 7305

TTL: 63

Detail:

Dup: false

Host: 10.1.6.10

ICMPSeq: 1

Received Bytes: 64

Roundtrip Time MS: 274

TTL: 63

Detail:

Dup: false

Host: 10.1.6.10

ICMPSeq: 2

Received Bytes: 64

Roundtrip Time MS: 242

TTL: 63

[root@VAPELHhost01:/]

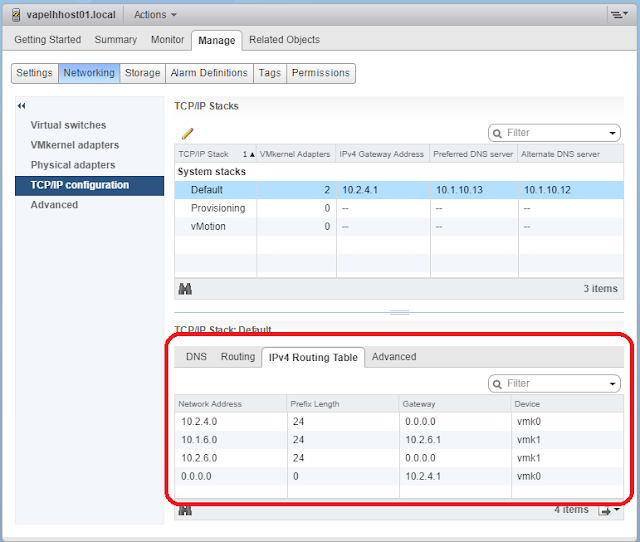
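If you want to check the ping result from a script rather than by eye, the `Summary:` section is easy to parse. The sketch below is an assumption-laden example: `parse_ping_summary` and `ping_ok` are hypothetical helper names, and the sample text is simply the two summaries shown in this post. Note that the key is spelled `Recieved`, exactly as esxcli prints it.

```python
FAILED = """\
Summary:
Duplicated: 0
Host Addr: TOPOLHNAS01is.local
Packet Lost: 100
Recieved: 0
Roundtrip Avg MS: -2147483648
Roundtrip Max MS: 0
Roundtrip Min MS: 999999000
Transmitted: 3
Trace:
"""

SUCCEEDED = """\
Summary:
Duplicated: 0
Host Addr: TOPOLHNAS01is.local
Packet Lost: 0
Recieved: 3
Roundtrip Avg MS: 524
Roundtrip Max MS: 1036
Roundtrip Min MS: 268
Transmitted: 3
Trace:
"""


def parse_ping_summary(text: str) -> dict:
    """Collect the 'Key: value' lines of the Summary section into a dict."""
    summary = {}
    section = None
    for raw in text.splitlines():
        line = raw.strip()
        if not line:
            continue
        key, sep, value = line.partition(":")
        value = value.strip()
        if sep and not value:
            # A bare "Name:" line (Summary, Trace, Detail) starts a section.
            section = key.strip()
        elif sep and section == "Summary":
            summary[key.strip()] = value
    return summary


def ping_ok(summary: dict) -> bool:
    """True when nothing was lost and at least one reply came back."""
    return int(summary["Packet Lost"]) == 0 and int(summary["Recieved"]) > 0


print(ping_ok(parse_ping_summary(FAILED)))
print(ping_ok(parse_ping_summary(SUCCEEDED)))
```

The odd average of -2147483648 in the failed run is the smallest 32-bit signed integer, so it is presumably a placeholder for "no replies" rather than a measurement. The TTL of 63 in the successful replies would be consistent with a single router hop if the NAS sends with an initial TTL of 64; that initial value is an assumption.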

New routing table. The 10.1.6.0/24 route on vmk1 now points to the default gateway of the local iSCSI subnet, i.e. 10.2.6.1.

[root@VAPELHhost01:/tmp/iscsi2] esxcfg-route --list

VMkernel Routes:

| Network | Netmask | Gateway | Interface |
| --- | --- | --- | --- |
| 10.1.6.0 | 255.255.255.0 | 10.2.6.1 | vmk1 |
| 10.2.4.0 | 255.255.255.0 | Local Subnet | vmk0 |
| 10.2.6.0 | 255.255.255.0 | Local Subnet | vmk1 |
| default | 0.0.0.0 | 10.2.4.1 | vmk0 |

[root@VAPELHhost01:/tmp/iscsi2]
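To see what the static route changes, here is a small longest-prefix-match lookup over the routing table before and after the fix. It is a simplified model of route selection, not the VMkernel's actual implementation; the `lookup` function and the tuple layout are my own, `None` stands in for "Local Subnet", and the `default` row is modeled as 0.0.0.0/0.

```python
import ipaddress

# (network, gateway, interface) rows from `esxcfg-route --list`.
ROUTES_BEFORE = [
    ("10.2.4.0/24", None, "vmk0"),
    ("10.2.6.0/24", None, "vmk1"),
    ("0.0.0.0/0", "10.2.4.1", "vmk0"),
]
ROUTES_AFTER = [("10.1.6.0/24", "10.2.6.1", "vmk1")] + ROUTES_BEFORE


def lookup(routes, destination):
    """Return (gateway, interface) of the most specific matching route."""
    dest = ipaddress.ip_address(destination)
    matches = [
        (ipaddress.ip_network(net), gw, iface)
        for net, gw, iface in routes
        if dest in ipaddress.ip_network(net)
    ]
    # The default route matches everything, so `matches` is never empty here.
    _, gw, iface = max(matches, key=lambda m: m[0].prefixlen)
    return gw, iface


NAS = "10.1.6.10"  # address the storage appliance answered from
print(lookup(ROUTES_BEFORE, NAS))  # ('10.2.4.1', 'vmk0')
print(lookup(ROUTES_AFTER, NAS))   # ('10.2.6.1', 'vmk1')
```

Before the fix, the only match for the storage address is the default route, which leaves through the management interface vmk0. After the fix, the more specific /24 route wins and the traffic goes out of vmk1 via 10.2.6.1.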

The final step is to inform vCenter of the changes by restarting the host management agents (hostd and vpxa):

[root@VAPELHhost01:~] /etc/init.d/hostd restart

watchdog-hostd: Terminating watchdog process with PID 109254

hostd stopped.

Ramdisk 'hostd' with estimated size of 803MB already exists

hostd started.

[root@VAPELHhost01:~] /etc/init.d/vpxa restart

watchdog-vpxa: Terminating watchdog process with PID 109940

vpxa stopped.

[root@VAPELHhost01:~]

Then refresh the Web Client.

Version of ESXi.

[root@VAPELHhost01:~] vmware -v

VMware ESXi 6.0.0 build-5050593

[root@VAPELHhost01:~]

Done.

References: